In the following code blocks, we provide the actual GPT-3.5 Turbo (v11-06; `gpt-3.5-turbo-1106`) prompts that we use for generation and teaching in our gift-bag assembly setting:

Base LLM System Prompt with In-Context Examples

    from typing import Dict, List

    # Utility Function for "Python-izing" Objects as Literal Types
    def pythonize_types(types: Dict[str, List[Dict[str, str]]]) -> str:
        py_str = "# Python Enums defining the various known objects in the scene\n\n"

        # Create Enums for each Type Class
        py_str += "# Enums for Various Object Types\n"
        for type_cls, element_list in types.items():
            py_str += f"class {type_cls}(Enum):\n"
            for element in element_list:
                py_str += f"    {element['name']} = auto()  # {element['docstring']}\n"
            py_str += "\n"

        return py_str.strip()

    # Initial "Seed" Objects in the Environment
    TYPE_DEFINITIONS = {
        "object": [
            {"name": "CANDY", "docstring": "A gummy, sandwich-shaped candy."},
            {"name": "GIFT_BAG", "docstring": "A gift bag that can hold items."},
        ]
    }

    # Base System Prompt -- with "Python-ized" Types
    BASE_SYSTEM_PROMPT = (
        "You are a reliable code interface that will be representing a robot arm in a collaborative interaction "
        "with a user.\n\n"
        "In today's session, the user and robot arm will be working together to wrap gifts. "
        "On the table are various gift-wrapping related objects.\n\n"
        "You will have access to a Python API defining some objects and high-level functions for "
        "controlling the robot. \n\n"
        "```python\n"
        f"{pythonize_types(TYPE_DEFINITIONS)}\n"
        "```\n\n"
        "Given a spoken utterance from the user your job is to identify the correct sequence of function calls and "
        "arguments from the API, returning the appropriate API call in JSON. Note that the speech-to-text engine is not "
        "perfect! Do your best to handle ambiguities, for example:\n"
        "\t- 'Put the carrots in the back' --> 'Put the carrots in the bag' (hard 'g')\n"
        "\t- 'Throw the popcorn in the in' --> 'Throw the popcorn in the bin' (soft 'b')\n\n"
        "If an object is not in the API, you should not fail. Instead, return a new object, which will be added to the API in the future. "
        "Even if you are not sure, respond as best you can to user inputs. "
    )

    # In-Context Examples
    ICL_EXAMPLES = [
        {"role": "system", "content": BASE_SYSTEM_PROMPT},
        make_example("release", "release", "{}", "1"),
        make_example("grasp", "grasp", "{}", "2"),
        make_example("go home", "go_home", "{}", "3"),
        make_example("go to the bag", "goto", "{'object': 'GIFT_BAG'}", "5"),
        make_example("go away!", "go_home", "{}", "6"),
        make_example("grab the gummy", "pickup", "{'object': 'CANDY'}", "7"),
    ]

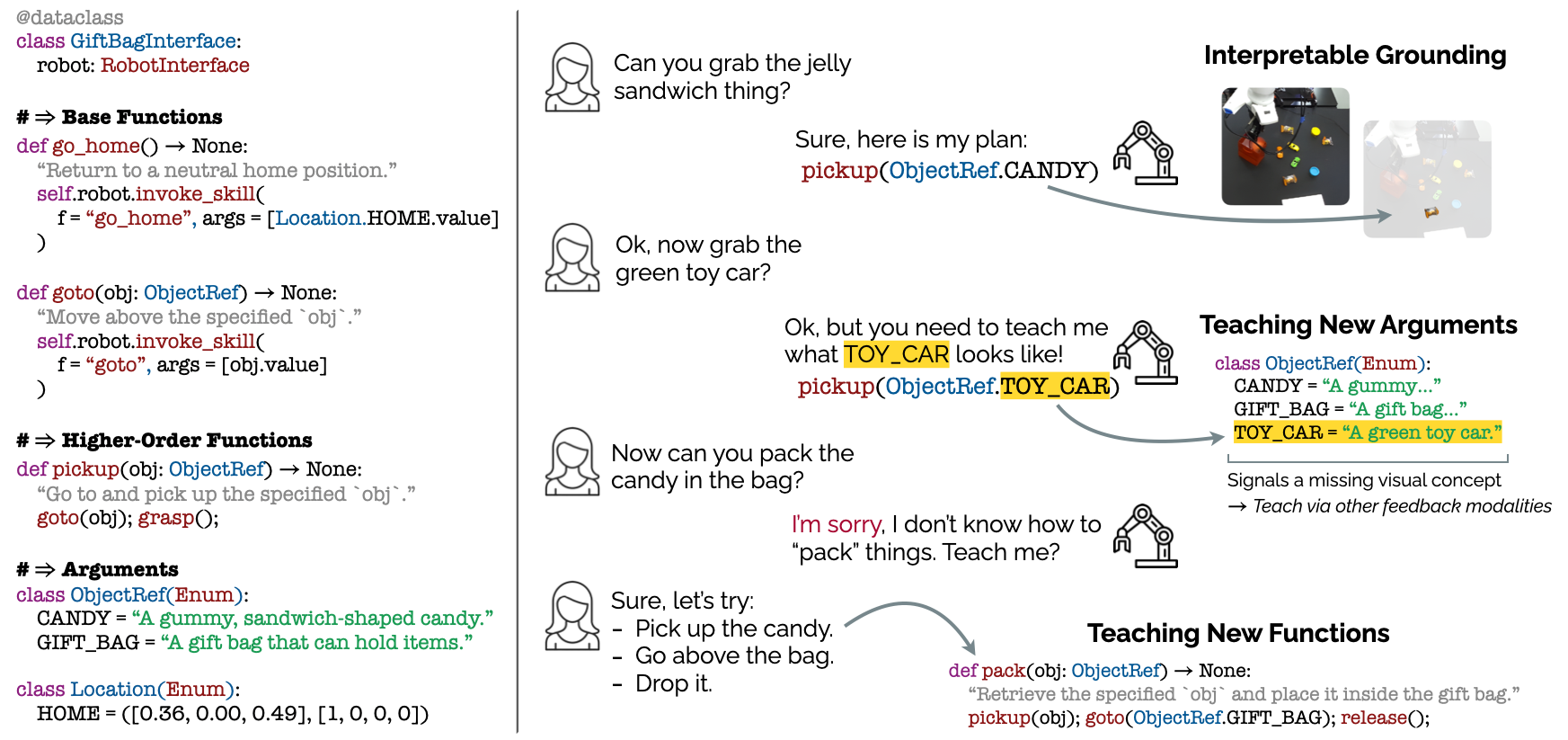
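The listing above calls `make_example` without defining it. As a minimal sketch, assuming each demonstration expands into the user / assistant tool-call / tool-result message triple used by the Chat Completions tool-calling format, a helper of that shape might look as follows. The body, the parameter names, and the `"success"` tool result are assumptions made for illustration, not the authors' implementation.

```python
from typing import Any, Dict, List


def make_example(utterance: str, fn_name: str, fn_args: str, call_id: str) -> List[Dict[str, Any]]:
    """Hypothetical helper: expand one demonstration into three chat messages."""
    return [
        # 1. What the user said.
        {"role": "user", "content": utterance},
        # 2. The assistant answering with a single tool call.
        {
            "role": "assistant",
            "content": None,
            "tool_calls": [
                {
                    "id": call_id,
                    "type": "function",
                    "function": {"name": fn_name, "arguments": fn_args},
                }
            ],
        },
        # 3. The tool result, linked back to the call by its id (assumed payload).
        {"role": "tool", "tool_call_id": call_id, "content": "success"},
    ]


example = make_example("go to the bag", "goto", "{'object': 'GIFT_BAG'}", "5")
print([message["role"] for message in example])
```

Under this assumption each call returns a list, so the results would have to be unpacked (for instance with `*`) to keep `ICL_EXAMPLES` a flat list of messages.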

Note that the System Prompt explicitly encodes the arguments/literals defined in the API; these are updated continually as the user defines new literals (e.g., `TOY_CAR`), following the Enum format shown above. The System Prompt also explicitly encodes handling for common speech-to-text errors.
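To illustrate the update step, the sketch below appends a new literal to the type definitions and re-renders the Enum text that is spliced into the System Prompt. `pythonize_types` mirrors the listing above so that the block runs on its own; the `add_literal` helper and the `TOY_CAR` docstring are hypothetical, introduced only for this example.

```python
from typing import Dict, List

TypeDefs = Dict[str, List[Dict[str, str]]]


def pythonize_types(types: TypeDefs) -> str:
    """Render the known literals as Python Enum source text."""
    py_str = "# Python Enums defining the various known objects in the scene\n\n"
    py_str += "# Enums for Various Object Types\n"
    for type_cls, element_list in types.items():
        py_str += f"class {type_cls}(Enum):\n"
        for element in element_list:
            py_str += f"    {element['name']} = auto()  # {element['docstring']}\n"
        py_str += "\n"
    return py_str.strip()


def add_literal(types: TypeDefs, type_cls: str, name: str, docstring: str) -> None:
    """Hypothetical helper: register a new literal unless the name already exists."""
    elements = types.setdefault(type_cls, [])
    if all(element["name"] != name for element in elements):
        elements.append({"name": name, "docstring": docstring})


type_definitions: TypeDefs = {
    "object": [
        {"name": "CANDY", "docstring": "A gummy, sandwich-shaped candy."},
        {"name": "GIFT_BAG", "docstring": "A gift bag that can hold items."},
    ]
}

# The user introduces a new object; the docstring here is made up for illustration.
add_literal(type_definitions, "object", "TOY_CAR", "A small toy car.")
print(pythonize_types(type_definitions))
```

Because the System Prompt is assembled with an f-string when it is defined, it would need to be rebuilt after each such update for the new literal to reach the model.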

We pair this System Prompt with the actual "functions" (behaviors/skills) in the API specification. These are encoded via OpenAI's Function Calling Format, and are similarly updated continuously.

Function Calling Tool Specification

    # Initial Seed "Functions" (Primitives)
    FUNCTIONS = [
        {
            "type": "function",
            "function": {
                "name": "go_home",
                "description": "Return to a neutral home position (compliant)."
            }
        },
        {
            "type": "function",
            "function": {
                "name": "goto",
                "description": "Move directly to the specified `Object` (compliant).",
                "parameters": {
                    "type": "object",
                    "properties": {
                        "object": {
                            "type": "string",
                            "description": "An object in the scene (e.g., RIGHT_HAND)."
                        },
                    },
                    "required": ["object"],
                }
            }
        },
        {
            "type": "function",
            "function": {
                "name": "grasp",
                "description": "Close the gripper at the current position, potentially grasping an object (non-compliant)."
            }
        },
        {
            "type": "function",
            "function": {
                "name": "release",
                "description": "Release the currently held object (if any) by fully opening the gripper (compliant)."
            }
        },
        {
            "type": "function",
            "function": {
                "name": "pickup",
                "description": "Go to and pick up the specified object (non-compliant).",
                "parameters": {
                    "type": "object",
                    "properties": {
                        "object": {
                            "type": "string",
                            "description": "An object in the scene (e.g., SCISSORS)."
                        }
                    },
                    "required": ["object"]
                }
            }
        },
    ]

Given the above, we can generate a plan (a sequence of tool calls with the appropriate arguments) for a new user instruction as follows:

    from openai import OpenAI

    # OpenAI Chat Completion Invocation - All Responses are added to "ICL_EXAMPLES" as running memory
    openai_client = OpenAI(api_key=openai_api_key, organization=organization_id)
    llm_response = openai_client.chat.completions.create(
        model="gpt-3.5-turbo-1106",
        messages=[*ICL_EXAMPLES, {"role": "user", "content": "{USER_UTTERANCE}"}],
        temperature=0.2,
        tools=FUNCTIONS,
        tool_choice="auto",
    )
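The plan itself is carried in the tool calls of the returned message. Below is a minimal sketch of extracting it, assuming the response layout of the `openai` Python client (v1), where each tool call exposes `function.name` and a JSON-encoded `function.arguments` string. The `extract_plan` helper is hypothetical, and a stand-in object replaces the real response so that the example runs offline.

```python
import json
from types import SimpleNamespace
from typing import Any, Dict, List, Tuple


def extract_plan(response: Any) -> List[Tuple[str, Dict[str, Any]]]:
    """Hypothetical helper: turn a chat completion into (function name, kwargs) steps."""
    message = response.choices[0].message
    plan = []
    # `tool_calls` is None when the model replies with plain text instead of calls.
    for call in message.tool_calls or []:
        raw_args = call.function.arguments
        plan.append((call.function.name, json.loads(raw_args) if raw_args else {}))
    return plan


def fake_call(name: str, arguments: str) -> SimpleNamespace:
    """Build a stand-in with the same attribute layout as a real tool call."""
    return SimpleNamespace(function=SimpleNamespace(name=name, arguments=arguments))


# Stand-in for `llm_response`; a real response would come from the API call above.
fake_response = SimpleNamespace(
    choices=[
        SimpleNamespace(
            message=SimpleNamespace(
                tool_calls=[
                    fake_call("pickup", '{"object": "CANDY"}'),
                    fake_call("goto", '{"object": "GIFT_BAG"}'),
                    fake_call("release", "{}"),
                ]
            )
        )
    ]
)

print(extract_plan(fake_response))
```

Each step can then be dispatched to the matching robot primitive in order.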

Finally, a key component of our framework is the ability to teach new high-level behaviors; to do this, we define a special `TEACH()` function (exposed to the LLM as the `teach_function` tool) that automatically generates the new specification (name, docstring, type signature). We call this explicitly when the user indicates they want to "teach" a new behavior.

Teach Function Specification

    TEACH_FUNCTION = [
        {
            "type": "function",
            "function": {
                "name": "teach_function",
                "description": "Signal the user that the behavior or skill they mentioned is not represented in the set of known functions, and needs to be explicitly taught.",
                "parameters": {
                    "type": "object",
                    "properties": {
                        "new_function_name": {
                            "type": "string",
                            "description": "Informative Python function name for the new behavior/skill that the user needs "
                                           "to add (e.g., `bring_to_user`)."
                        },
                        "new_function_signature": {
                            "type": "string",
                            "description": "List of arguments from the command for the new function (e.g., '[SCISSORS, RIBBON]' or '[]')."
                        },
                        "new_function_description": {
                            "type": "string",
                            "description": "Short description to populate docstring for the new function (e.g., 'Pickup the specified object and bring it to the user (compliant).')."
                        },
                    },
                    "required": ["new_function_name", "new_function_signature", "new_function_description"]
                }
            }
        }
    ]

    # Invoking the Teach Function
    teach_response = openai_client.chat.completions.create(
        model="gpt-3.5-turbo-1106",
        messages=[*ICL_EXAMPLES, {"role": "user", "content": "{TEACHING_TRACE}"}],
        temperature=0.2,
        tools=TEACH_FUNCTION,
        tool_choice={"type": "function", "function": {"name": "teach_function"}},  # Force invocation
    )

The synthesized function is then added to `FUNCTIONS` immediately, so that it can be used as soon as the user provides their next utterance.
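As a sketch of this last step, the arguments returned by the forced `teach_function` call can be converted into a new entry in the same tool-specification format. The `build_tool_spec` helper, the parsing of the signature string, the generated parameter names (`object_1`, `object_2`, ...), and the choice to type every argument as a string are assumptions made for illustration; the listings above do not show how this conversion is actually performed.

```python
import json
from typing import Any, Dict, List


def build_tool_spec(teach_arguments: str) -> Dict[str, Any]:
    """Hypothetical helper: convert `teach_function` arguments into a tool spec."""
    args = json.loads(teach_arguments)

    # The signature arrives as a string such as "[SCISSORS, RIBBON]" or "[]".
    tokens = args["new_function_signature"].strip("[] ").split(",")
    literals = [token.strip() for token in tokens if token.strip()]
    # Assumption: one string-typed parameter per literal named in the command.
    param_names = [f"object_{i}" for i in range(1, len(literals) + 1)]

    function: Dict[str, Any] = {
        "name": args["new_function_name"],
        "description": args["new_function_description"],
    }
    if param_names:
        function["parameters"] = {
            "type": "object",
            "properties": {
                name: {"type": "string", "description": "An object in the scene."}
                for name in param_names
            },
            "required": param_names,
        }
    return {"type": "function", "function": function}


functions: List[Dict[str, Any]] = []  # stands in for FUNCTIONS

# Example arguments in the shape requested by the teach specification above.
teach_arguments = json.dumps(
    {
        "new_function_name": "bring_to_user",
        "new_function_signature": "[SCISSORS]",
        "new_function_description": "Pickup the specified object and bring it to the user (compliant).",
    }
)

functions.append(build_tool_spec(teach_arguments))
print(json.dumps(functions[-1], indent=2))
```

In a real session, `teach_arguments` would be read from the tool call in `teach_response` rather than constructed by hand.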